An overview of deep learning methods in radiology

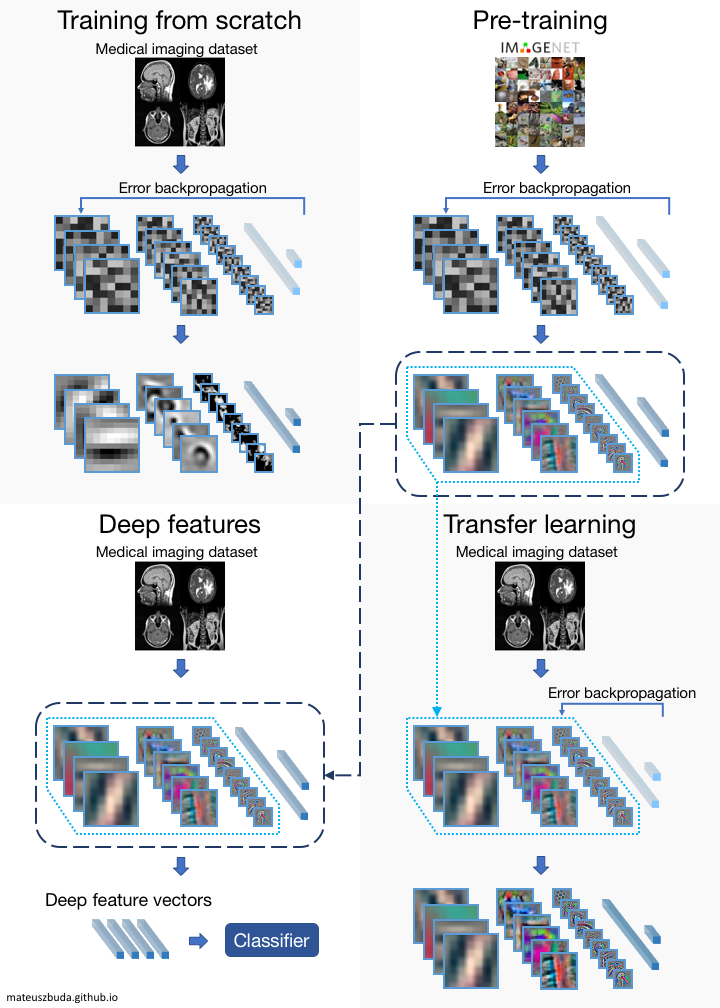

The most straightforward way of training a convolutional neural network (CNN) is to start with a random set of weights and train it using available data specific to the problem being solved (training from scratch). However, given the large number of parameters (weights) in a network, often above 10 million, and a limited amount of training data, a network may overfit to the available data, resulting in poor performance on test data. Two training methods have been developed to address this issue: transfer learning [1] and off-the-shelf features (a.k.a. deep features) [2]. A diagram comparing training from scratch with transfer learning and off-the-shelf deep features is shown in Figure 1.
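To make the "above 10 million" figure concrete, the parameter count of a network can be worked out from its layer sizes. The sketch below assumes a VGG-16-style layout (13 convolutional layers with 3×3 kernels followed by three fully connected layers). That architecture is chosen here only for illustration and is not named in the text above.

```python
# Parameter count for a VGG-16-style CNN (layout assumed for illustration).
# A k x k convolution has (k*k*c_in + 1) * c_out weights (the +1 is the bias);
# a fully connected layer has (n_in + 1) * n_out.

def conv_params(c_in, c_out, kernel=3):
    return (kernel * kernel * c_in + 1) * c_out

def dense_params(n_in, n_out):
    return (n_in + 1) * n_out

# Output channels of the 13 convolutional layers, for a 3-channel input image.
conv_channels = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
# Fully connected layers: 7*7*512 flattened inputs, then 4096, 4096, 1000 classes.
dense_sizes = [7 * 7 * 512, 4096, 4096, 1000]

conv_total = 0
c_in = 3
for c_out in conv_channels:
    conv_total += conv_params(c_in, c_out)
    c_in = c_out

dense_total = sum(dense_params(a, b) for a, b in zip(dense_sizes, dense_sizes[1:]))
total = conv_total + dense_total

print(f"convolutional layers:   {conv_total:,}")   # 14,714,688
print(f"fully connected layers: {dense_total:,}")  # 123,642,856
print(f"total:                  {total:,}")        # 138,357,544
```

Under these assumptions the network has roughly 138 million weights, most of them in the fully connected layers. A training set with far fewer images than that gives the network ample room to memorize the data instead of generalizing.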

Figure 1. An overview of deep learning methods. Comparison between training from scratch, transfer learning and deep features approaches.

In transfer learning, the network is first trained on a different dataset, e.g. ImageNet. The network is then “fine-tuned” through additional training with data specific to the problem at hand. The idea behind this approach is that different visual tasks share a certain level of processing, such as the recognition of edges or simple shapes.

Another way to address the issue of limited training data is the deep features approach, which uses a convolutional neural network trained on a different dataset to extract features from the images. The features are the activations of layers prior to the network’s final layer; those layers typically have hundreds or thousands of outputs. These outputs are then used as inputs to “traditional” classifiers such as linear discriminant analysis, support vector machines, or decision trees. This is similar to transfer learning (and is sometimes considered a part of it), with the difference that the last layers of the CNN are replaced by a traditional classifier and the other layers are not additionally trained.
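The data flow of the deep features approach can be sketched in a few lines of plain Python. Everything in this sketch is a stand-in: `make_frozen_extractor` is a hypothetical helper that plays the role of a pretrained CNN cut off before its final layer (its weights are fixed random numbers, not ImageNet weights), the 16-pixel "images" are synthetic, and a nearest-centroid classifier is swapped in for the traditional classifiers named above to keep the example dependency-free.

```python
import random

def make_frozen_extractor(n_inputs, n_features, seed=0):
    """Stand-in for a pretrained CNN truncated before its final layer.

    The weights are fixed random numbers (an assumption for this sketch);
    in practice they would come from training on another dataset.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_features)]

    def extract(image):
        # One linear layer followed by ReLU; the weights are never updated.
        return [max(0.0, sum(w * p for w, p in zip(row, image)))
                for row in weights]
    return extract

def fit_nearest_centroid(features, labels):
    """Traditional classifier: store the mean feature vector of each class."""
    sums, counts = {}, {}
    for feature, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(feature)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], feature)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def predict(centroids, feature):
    """Return the class whose centroid is closest to the feature vector."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], feature))

def make_image(label, rng):
    # Synthetic 16-pixel "image": class 0 is bright on the left, class 1 on the right.
    if label == 0:
        base = [1.0] * 8 + [0.0] * 8
    else:
        base = [0.0] * 8 + [1.0] * 8
    return [p + rng.gauss(0.0, 0.1) for p in base]

rng = random.Random(1)
train_labels = [i % 2 for i in range(20)]
train_images = [make_image(y, rng) for y in train_labels]

# Step 1: extract features with the frozen network.
extract = make_frozen_extractor(n_inputs=16, n_features=32)
train_features = [extract(img) for img in train_images]

# Step 2: train only the traditional classifier on those features.
centroids = fit_nearest_centroid(train_features, train_labels)

# Step 3: classify new images through the same two stages.
test_labels = [0, 1, 1, 0]
test_images = [make_image(y, rng) for y in test_labels]
predictions = [predict(centroids, extract(img)) for img in test_images]
print("predicted:", predictions, "true:", test_labels)
```

The only component fitted to the target data is the classifier in step 2. Fine-tuning would differ at step 1: the extractor weights would keep being updated by further training on the target data rather than staying fixed.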

Links

- Journal paper: Journal of Magnetic Resonance Imaging

- arXiv paper: arXiv:1802.08717

References

[1] Jason Yosinski, Jeff Clune, Yoshua Bengio, and Hod Lipson. How transferable are features in deep neural networks? In Advances in Neural Information Processing Systems, pages 3320–3328, 2014.

[2] Ali Sharif Razavian, Hossein Azizpour, Josephine Sullivan, and Stefan Carlsson. CNN features off-the-shelf: an astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 806–813, 2014.